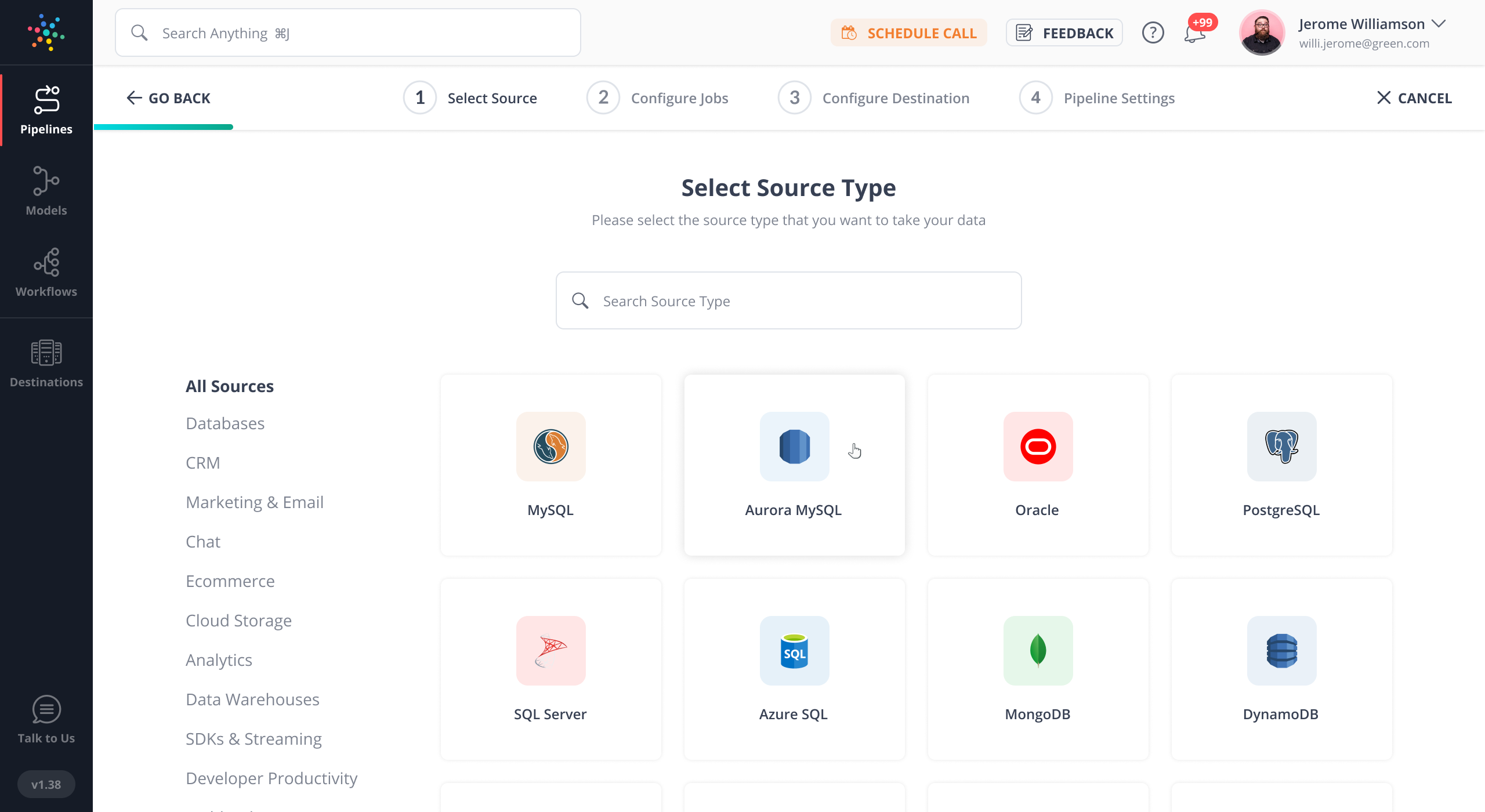

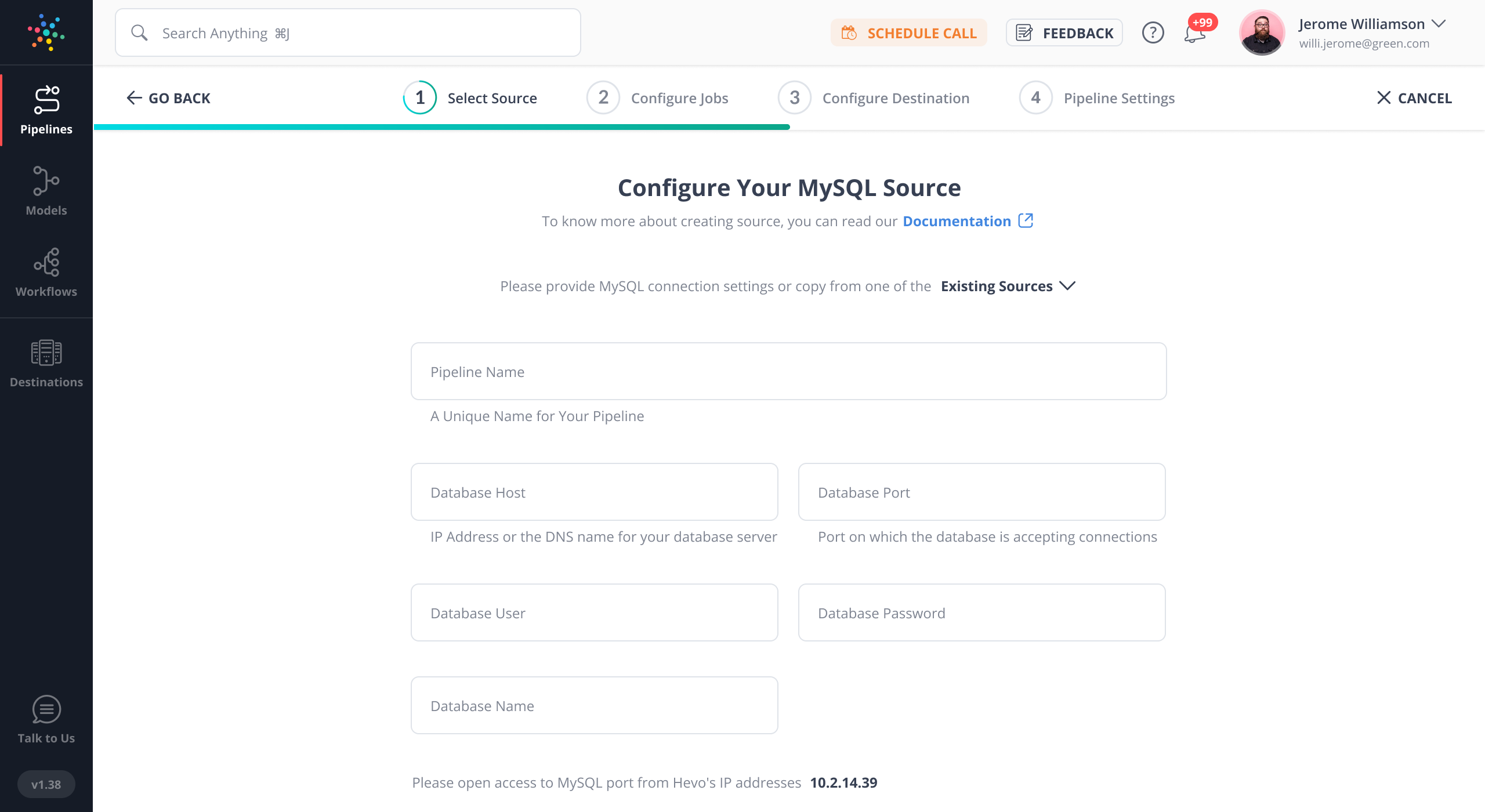

Hevo is a no-code data pipeline platform that helps businesses integrate data from multiple sources into any data warehouse in near real time. The platform supports 100+ ready-to-use integrations across databases, SaaS applications, cloud storage, SDKs, and streaming services. Try Hevo today and get fully managed data pipelines up and running in just a few minutes.

Hevo Data

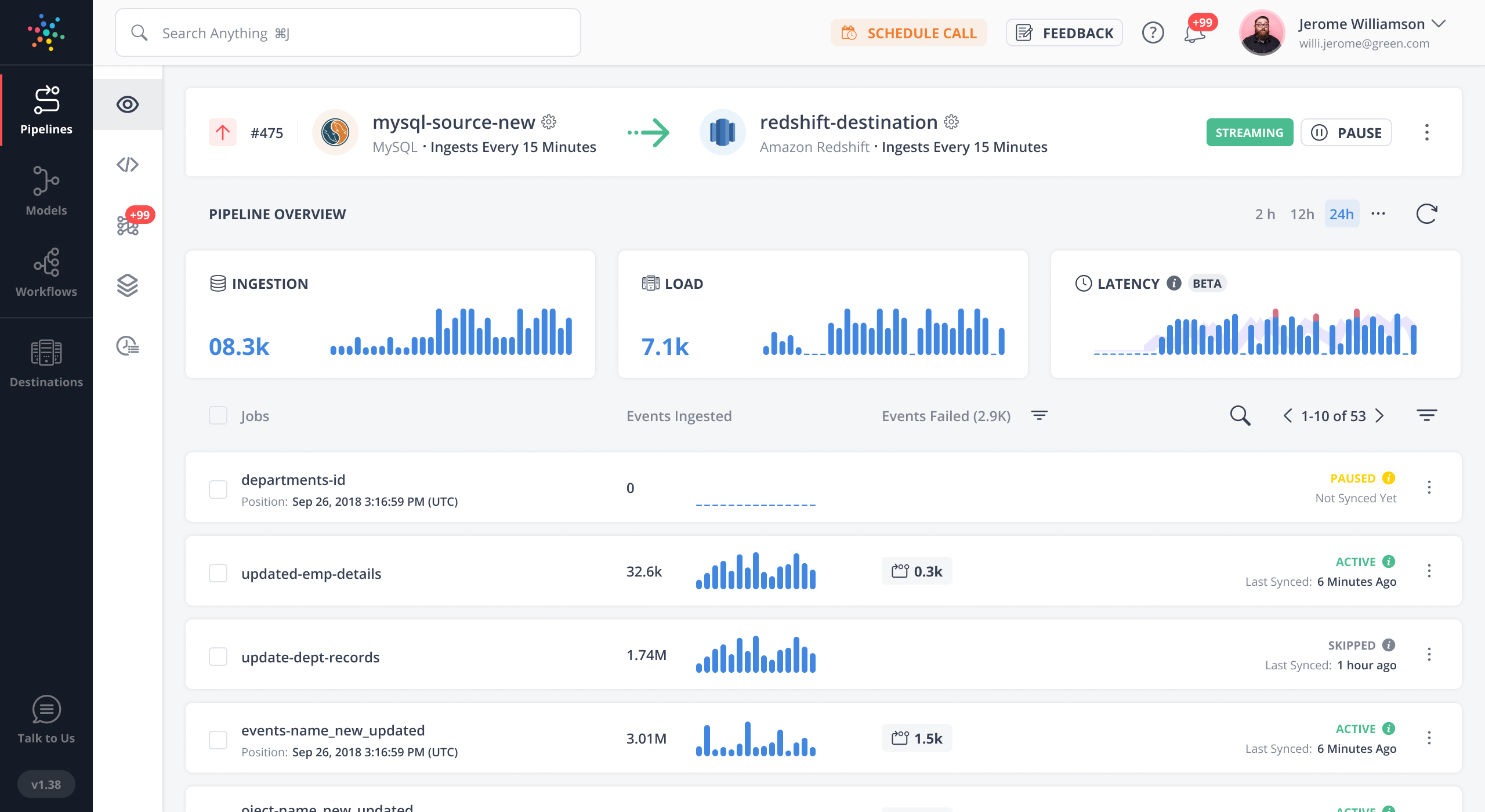

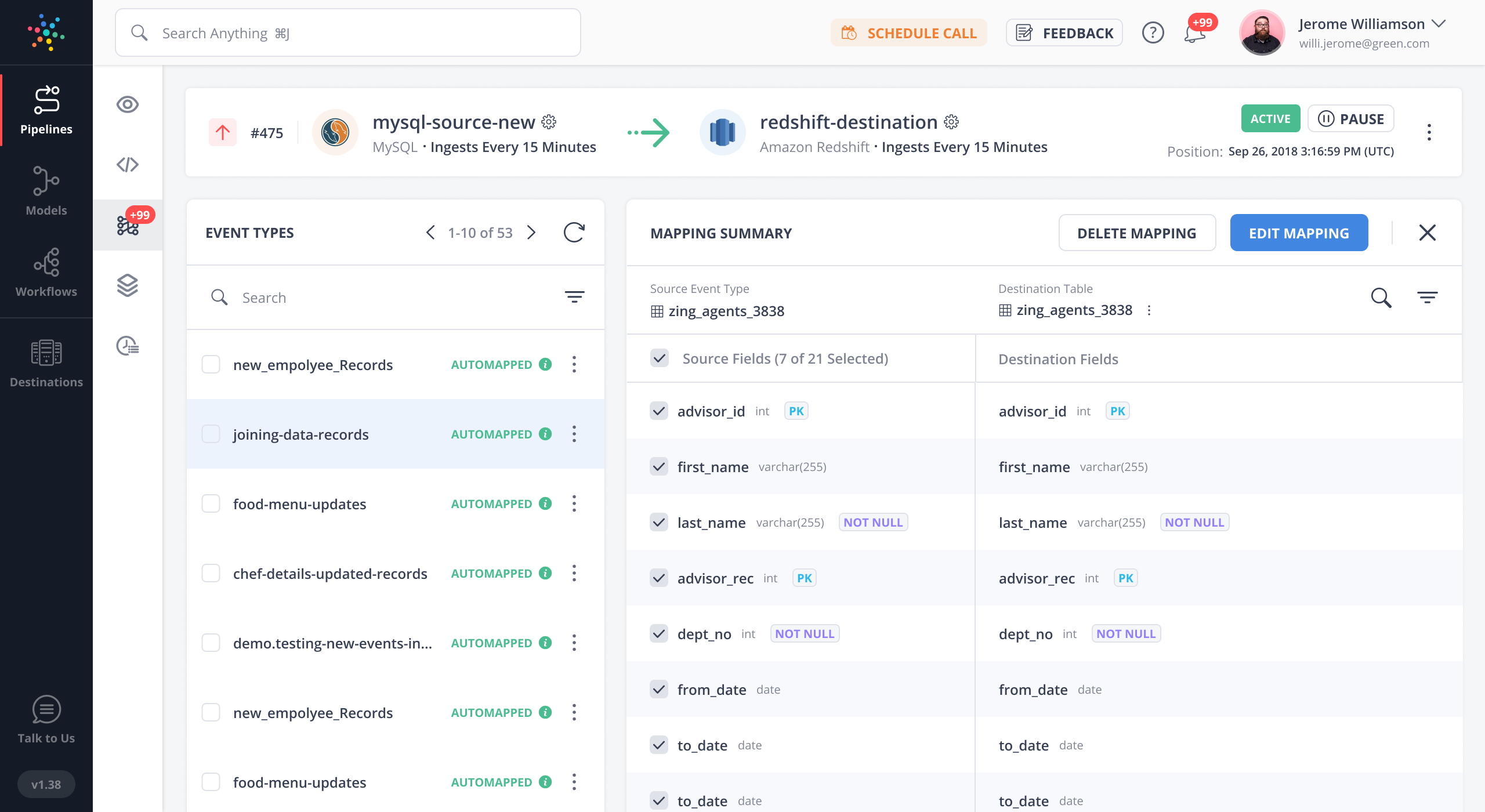

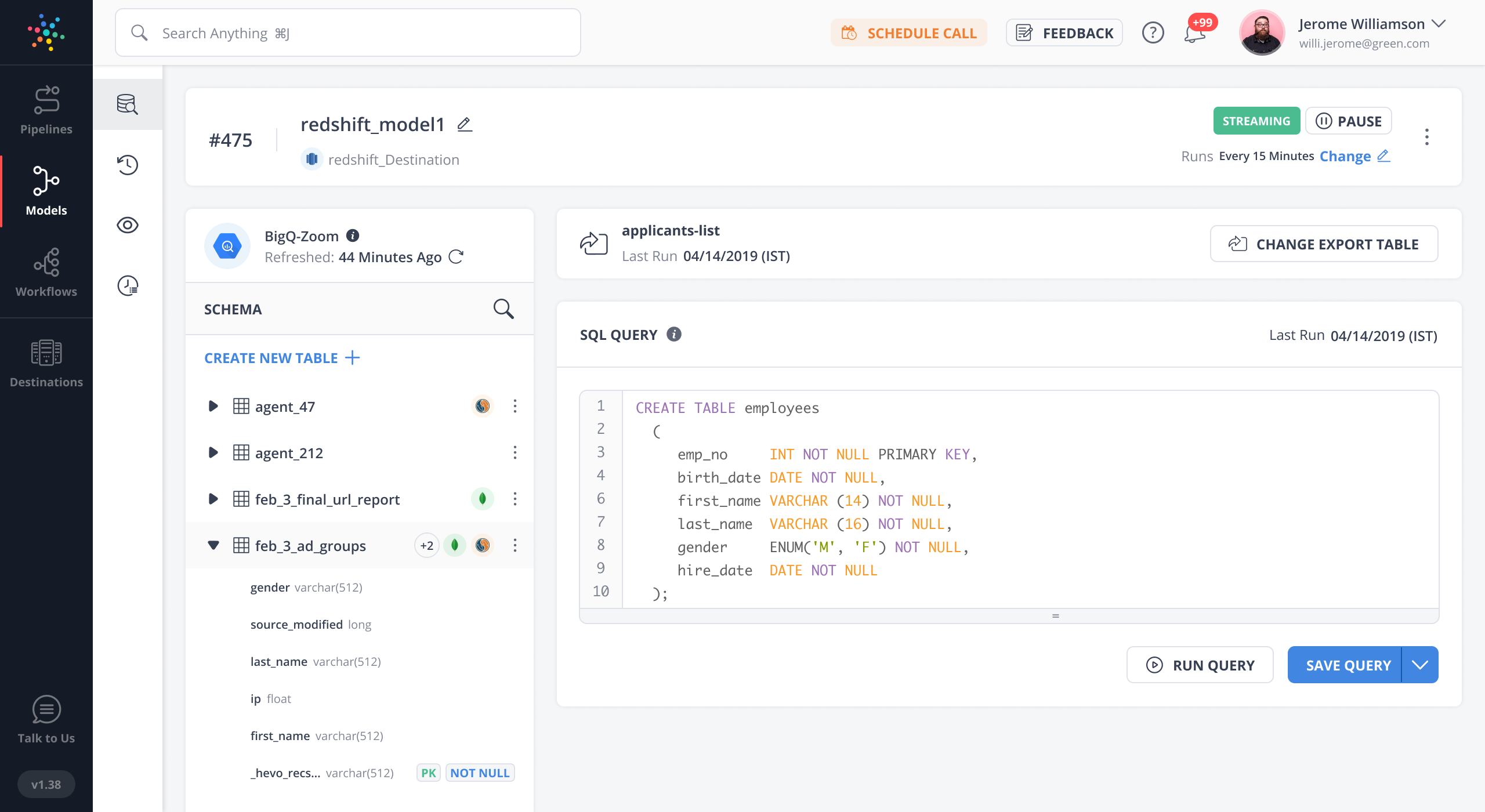


Customer Reviews

Hevo Data Reviews

Artemiy K.

Advanced user of Hevo Data

What do you like best?

It has suited all our needs.

- The most critical part was reading the Oplog from a production MongoDB replica.

- Cleaning PII and other sensitive data on the fly before loading it into the data warehouse (DWH).

- A wide range of sources, including Webhooks (for any arbitrary source).

What do you dislike?

There is always room for further development.

As an advanced user with a discerning eye, I can point to a few things to work on:

- Importing arbitrary libraries and code to perform transformations on the fly (currently only Python 2 is available).

- Performing a historical load with filters (what if I need to replicate only 100 rows out of 100M?).

What problems are you solving with the product? What benefits have you realized?

First of all, we need to read the replication log from the source database, be it MongoDB's Oplog or PostgreSQL's WAL, to minimize load on production databases.

Secondly, we want to get rid of any PII as well as perform specific calculations on the fly. That's where Hevo's transformations come in handy.

Lastly, we want a reliable, clear, and concise panel, service, and support, all of which we get from the Hevo team.
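To illustrate the on-the-fly PII removal and calculations mentioned above, here is a minimal sketch in plain Python 3. It is not Hevo's transformation API; the field names (`ssn`, `phone`, `email`, `quantity`, `unit_price`) and both helper functions are hypothetical and only show the general idea of scrubbing a record before it reaches the warehouse.

```python
import hashlib

# Hypothetical field names; a real pipeline would list its own sensitive columns.
DROP_FIELDS = {"ssn", "phone"}
HASH_FIELDS = {"email"}


def scrub_pii(record: dict) -> dict:
    """Return a copy of the record with sensitive fields dropped or hashed."""
    cleaned = {}
    for key, value in record.items():
        if key in DROP_FIELDS:
            continue  # remove the field entirely
        if key in HASH_FIELDS and value is not None:
            # A one-way hash keeps the column usable for joins without storing
            # the raw value. Unsalted hashing is pseudonymization only.
            cleaned[key] = hashlib.sha256(str(value).encode("utf-8")).hexdigest()
        else:
            cleaned[key] = value
    return cleaned


def add_order_total(record: dict) -> dict:
    """Example of a derived value computed before loading (assumed fields)."""
    out = dict(record)
    out["total"] = out.get("quantity", 0) * out.get("unit_price", 0)
    return out
```

In a setup like the one described, each incoming event would pass through `scrub_pii` and then any calculation step such as `add_order_total` before being written to the destination.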