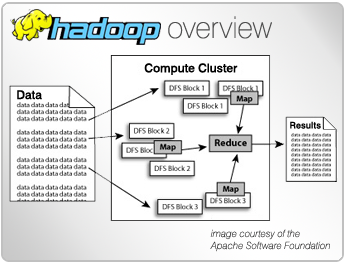

Hadoop HDFS is a distributed, scalable, and portable filesystem written in Java.

Customer Reviews

Hadoop HDFS Reviews

AAKASH C.

Advanced user of Hadoop HDFS

What do you like best?

Distributed storage and resilience of the data.

Handles multiple file formats such as Parquet and Avro, and connects well with other systems.

Integrates with Hadoop MapReduce and with processing frameworks like Spark.

The syntax is similar to a normal file system if you already know Unix or another file system.

Easily Scalable.

Easy to learn and configure.

Can be used with traditional MapReduce as well as newer frameworks like Spark, Storm and Scalding.

Can be set up on a virtual private cloud instance.

It is also very cost-effective.
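To illustrate the point about Unix-like syntax, here is a minimal sketch that maps a few familiar Unix commands to their `hdfs dfs` counterparts. The helper name `hdfs_command` and the `/data/banking` paths are hypothetical and exist only for this example; the script only builds and prints the command lines, it does not contact a cluster.

```python
import shlex

# Unix command -> flags of the roughly equivalent `hdfs dfs` subcommand.
UNIX_TO_HDFS = {
    "ls": ["-ls"],
    "mkdir -p": ["-mkdir", "-p"],
    "cat": ["-cat"],
    "du -h": ["-du", "-h"],
    "rm -r": ["-rm", "-r"],
}


def hdfs_command(unix_cmd, *paths):
    """Build the argv list for the HDFS equivalent of a Unix command."""
    return ["hdfs", "dfs", *UNIX_TO_HDFS[unix_cmd], *paths]


# Hypothetical paths, used only for illustration.
examples = [
    ("ls", "/data/banking"),
    ("mkdir -p", "/data/banking/2024"),
    ("cat", "/data/banking/readme.txt"),
    ("du -h", "/data/banking"),
    ("rm -r", "/data/banking/tmp"),
]

for unix_cmd, path in examples:
    # Print the Unix form next to the HDFS form for comparison.
    print(f"{unix_cmd} {path}  ->  {shlex.join(hdfs_command(unix_cmd, path))}")
```

On a machine with a configured Hadoop client, each list could be passed to `subprocess.run` to execute the command.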

What do you dislike?

Setup and syntax are a little slow to learn if you do not come from a similar background.

There are better storage options, such as S3, if you are already on a cloud like AWS.

Issues with small files.

Supports batch processing only; there is no real-time data processing.

The architecture is a little complex, i.e. terms like NameNode and DataNode are difficult to grasp the first time.

You need to know the different components if you want to use the full power of HDFS.

Data loss can sometimes occur if you don't configure rack awareness and the replication factor properly.

The documentation is a little difficult to understand for a first-time user.
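The small-files and replication points above can be made concrete with some rough arithmetic. This is a sketch under stated assumptions: the 128 MiB block size and replication factor of 3 are the usual HDFS defaults, while the figure of about 150 bytes of NameNode memory per file or block object is only a commonly quoted rule of thumb, not a measured value. The function name `footprint` is hypothetical.

```python
import math

BLOCK_SIZE = 128 * 1024**2  # default dfs.blocksize in Hadoop 2 and later
REPLICATION = 3             # default dfs.replication
BYTES_PER_OBJECT = 150      # ASSUMPTION: rule-of-thumb NameNode memory per object


def footprint(num_files, file_size):
    """Estimate block count, raw disk usage and NameNode memory for a dataset."""
    blocks_per_file = max(1, math.ceil(file_size / BLOCK_SIZE))
    blocks = num_files * blocks_per_file
    return {
        "blocks": blocks,
        # Every byte is stored REPLICATION times across DataNodes.
        "raw_bytes": num_files * file_size * REPLICATION,
        # One metadata object per file plus one per block.
        "namenode_bytes": (num_files + blocks) * BYTES_PER_OBJECT,
    }


# The same 1 TiB of data, stored as large files versus small files.
big = footprint(num_files=1024, file_size=1024**3)       # 1024 files of 1 GiB
small = footprint(num_files=1024**2, file_size=1024**2)  # 1,048,576 files of 1 MiB

for name, result in (("large files", big), ("small files", small)):
    print(name, result)
```

Both layouts use the same raw disk space, but the small-file layout creates far more metadata objects for the NameNode to track, which is where the small-files problem comes from.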

What problems are you solving with the product? What benefits have you realized?

The main problem HDFS solved for me is storing terabytes of banking data and letting Spark process it smoothly.

It removes the problem of having to store large files on a single system.

It also helped with writing data from Spark using different compression codecs such as gzip and Snappy.

Data in HDFS is easy to access during processing. Being able to store files in multiple formats is a benefit.

Another main benefit is partitioning, i.e. the data is stored in partitions.

Can be set up on a single standalone machine too.

Free and open source.

Performant, cheap, and easy to use.
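As a rough illustration of the partitioning benefit, the sketch below assumes the Hive-style directory layout (`column=value` folders) that Spark produces when writing with `partitionBy`. The review does not say which partitioning scheme was used, so this is an assumption, and the base path, column names and records are all made up for the example.

```python
from collections import defaultdict
from pathlib import PurePosixPath


def partition_path(base, record, keys):
    """Return the Hive-style partition directory for one record."""
    path = PurePosixPath(base)
    for key in keys:
        path = path / f"{key}={record[key]}"
    return str(path)


# Made-up records, for illustration only.
records = [
    {"year": 2023, "branch": "north", "amount": 120.0},
    {"year": 2023, "branch": "south", "amount": 75.5},
    {"year": 2024, "branch": "north", "amount": 310.0},
    {"year": 2024, "branch": "north", "amount": 42.0},
]

# Group records by the directory they would be written to.
groups = defaultdict(list)
for record in records:
    groups[partition_path("/data/transactions", record, ["year", "branch"])].append(record)

for path in sorted(groups):
    print(path, len(groups[path]))
```

With a layout like this, a query that filters on `year` or `branch` only has to read the matching directories instead of scanning the whole dataset.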