Databricks is the data and AI company. More than 5,000 organizations worldwide (including Comcast, Condé Nast, Nationwide, H&M, and over 40% of the Fortune 500) rely on Databricks’ unified data platform for data engineering, machine learning, and analytics. Databricks is headquartered in San Francisco, with offices around the globe. Founded by the original creators of Apache Spark™, Delta Lake, and MLflow, Databricks is on a mission to help data teams solve the world’s toughest problems.

Databricks

Customer Reviews

Databricks Reviews

Prashidha K.

Advanced user of Databricks

What do you like best?

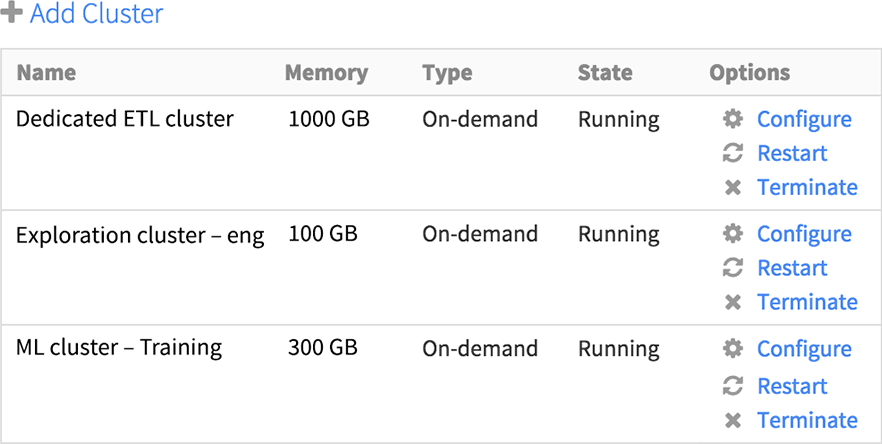

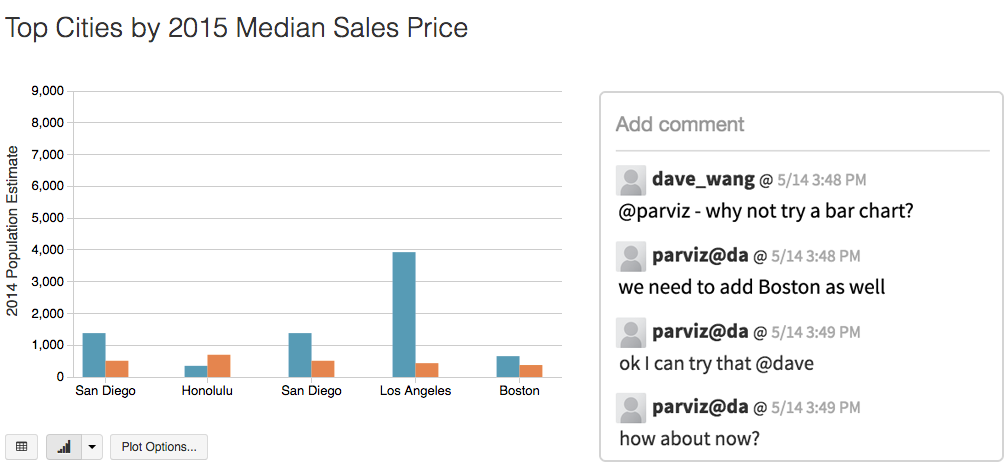

Databricks has very powerful distributed computing built in, with easy-to-deploy, optimized clusters for Spark computations. The notebooks with MLflow integration make it easy to use for Analytics and Data Science teams, yet the underlying APIs and CI/CD integrations make it very customizable for Data Engineers who need to create complex automated data pipelines. The ability to store, query, and manipulate massive Spark SQL tables with ACID guarantees in Delta Lake makes big data easily accessible to everyone in the organization.

What do you dislike?

It lacks built-in data backup features and the ability to restrict data access to specific users. So if anyone accidentally deletes data from a Delta table or DBFS, the lost data cannot be retrieved unless we set up our own customized backup solution.

What problems are you solving with the product? What benefits have you realized?

I have worked with big data, with hundreds of millions of rows, using Databricks. We do most of our ELT, data cleaning, and data prep work on Databricks. The ease and speed of querying big data using Databricks Spark SQL is very useful. It is also very easy to create prototype code against real-sized data using the available Python and R notebooks.